Before I start, I would like to point out #855549. This is a normal/wishlist bug I have filed against apt, the command-line package manager. I sincerely believe that a history command showing which packages were installed, which were upgraded and which were purged should be easily accessible and easily understood, and if the output looks pretty, so much the better. Of particular interest to me is a list of the new packages I have installed in the last couple of years, after jessie became the stable release. It would probably make for some interesting reading. I don't know how much effort it would take to code something like that, but if it works, it would be the greatest. Apt would have finally arrived. Not that it's a bad tool; it's just that it would then make for a heck of a useful tool.
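To give a rough idea of what I mean, here is a minimal sketch in Python. It is not how apt does or would do it, just an illustration. It assumes the usual layout of /var/log/apt/history.log: blank-line separated blocks with fields such as Start-Date, Install, Upgrade, Remove and Purge. The function names and the SAMPLE text are made up for the example.

```python
import re
from collections import defaultdict

# Fields of a history.log block that list packages (assumed layout).
ACTIONS = ("Install", "Upgrade", "Remove", "Purge")

# Made-up sample in the style of /var/log/apt/history.log.
SAMPLE = """
Start-Date: 2017-02-01  10:00:00
Commandline: apt install htop
Install: htop:amd64 (2.0.2-1)
End-Date: 2017-02-01  10:00:05

Start-Date: 2017-02-10  09:30:00
Commandline: apt upgrade
Upgrade: curl:amd64 (7.52.1-1, 7.52.1-2), libcurl3:amd64 (7.52.1-1, 7.52.1-2)
End-Date: 2017-02-10  09:31:00
"""


def parse_history(text):
    """Split the log into blocks and turn each block into a dict of fields."""
    entries = []
    for block in re.split(r"\n\s*\n", text.strip()):
        entry = {}
        for line in block.splitlines():
            key, sep, value = line.partition(": ")
            if sep:
                entry[key] = value
        if entry:
            entries.append(entry)
    return entries


def package_names(field):
    """Return bare package names from a field like 'foo:amd64 (1.0), bar:amd64 (2.0)'."""
    # Drop the parenthesised version info first, since it can contain commas.
    stripped = re.sub(r"\s*\([^)]*\)", "", field)
    return [p.strip().split(":")[0] for p in stripped.split(",") if p.strip()]


def summarize(text):
    """Map each action to a list of (start date, package name) pairs."""
    summary = defaultdict(list)
    for entry in parse_history(text):
        for action in ACTIONS:
            if action in entry:
                for name in package_names(entry[action]):
                    summary[action].append((entry.get("Start-Date", "?"), name))
    return dict(summary)


if __name__ == "__main__":
    for action, items in summarize(SAMPLE).items():
        for date, name in items:
            print(action, date, name)
```

To try it on a real system one would read /var/log/apt/history.log instead of SAMPLE. Older logs are usually rotated and compressed, so a full history would need those files as well.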

Coming back to the topic at hand: for the last couple of weeks we haven't had water, or rather water pressure. A water crisis has been hitting Pune every year since 2014 with no end in sight. This has been reported in newspapers ad nauseam, but it seems to have been falling on deaf ears. The end result is that I have to carry buckets of water from around 50-odd metres away.

It's not a big thing. It's not like some women in some villages in Rajasthan, who have to walk anywhere between 200 metres and 5-odd kilometres to get potable water, or Darfur, Western Sudan, where women are often kidnapped and sold as sexual slaves when they go to fetch water. The situation in Darfur has been shown quite vividly in Darfur is Dying. It is possible that I have mentioned Darfur before. Unfortunately the game is a Flash-based web resource, but the most disturbing part is that the game is extremely depressing: it is a no-win scenario.

So, knowing and seeing both those scenarios, I can't complain about 50 metres. But when you extrapolate the same over the more or less 3.3-3.4 million citizens of Pune (3.1 million in the 2011 census, with a conservative 2.3-2.4 percent population growth rate according to scroll.in), the scale of the problem becomes hard to ignore.

Fortunately or unfortunately, Pune Municipal Corporation elections were held today. Fortunately or unfortunately, this time all the political parties brought in mostly unknown faces. For example, I belong to ward 14, which is spread over quite a large area and has around 10k registered voters.

Now the unfortunate part of having new faces in elections is that you don't know anything about them. Apart from what is in the affidavits filed, namely whether there are criminal cases filed against them and what they have declared as their wealth, I know nothing.

I am, and should be, thankful to ADR, which is the force behind having the collated data made public. There is a largely untold story of political push-back by all the major national and regional political parties against even this bit of information being made public. It took the major part of a decade for such information to come into the public domain.

But my goal of getting clean air and a 24x7 water supply to each household seems a very distant dream. I tried to connect with the corporators about a week before the contest, and almost all of the lower party functionaries hid behind their parties' manifestos, stating they would do their best without offering any viable plan.

For those who don't know, India has been blessed with 6-odd national parties and about 36-odd regional parties, and every election some 20-25 new parties try their luck.

The problem is that we, the public, don't trust them or their manifestos. First of all, the political parties themselves engage in mud-slinging over who is copying whose manifesto. Even if a political party wins the elections, there is no *real* pressure on it to follow its own manifesto. This has been going on for many years. Of course, we the citizens are also to blame, as most citizens, for one reason or another, choose to remain aloof from the process. I scanned/leafed through all the manifestos, and all of them have vague wording such as "we will make Pune tanker-free" without any implementation details. While I was unable to meet the soon-to-be corporators, I did manage to meet a few of their assistants, but all the meetings were entirely fruitless.

I asked why the city can't follow the Chennai model. Chennai, not so long ago, was in the same place where Pune is now, especially in relation to water. What happened next, in 2001, has been beautifully chronicled in Hindustan Times. What has not been shared in that story is that the idea was actually fielded by one of the Chennai Mayor's assistants, an IAS officer whose name I have forgotten. Thankfully, her advice was taken to heart by the political establishment and they drove rain water harvesting (RWH).

When I asked why we can't do something similar in Pune, I heard all kinds of excuses. The worst and most used was "Marathas can never unite", which I think is pure bullshit. For people unfamiliar with the term, the Marathas were a warrior clan in Shivaji's army. Shivaji, the king of the Marathas, was an expert tactician and a master of guerrilla warfare. It is due to the valor of the Marathas that we still have the Maratha Light Infantry, a proud part of the Indian Army.

The reason I call it bullshit is that the composition of people living in Maharashtra has changed over the decades. While at one time both the Brahmins and the Marathas had considerable political clout and population numbers, that has changed drastically. Maharashtra, and more pointedly Mumbai, Pune and Nagpur, have become immigrant centres. Just a decade back Shiv Sena, an ultra right-wing political party, used to play the Maratha card at each and every election and heckle people coming from Uttar Pradesh and Bihar; this has been documented as the 2008 immigrants attacks. Nine years later we see Shiv Sena trying to field its candidates in Uttar Pradesh. So, obviously, they cannot use the same tactics which they could at one point of time.

One more reason I call it bullshit is that it's a very lame excuse. When the Prime Minister of the country calls for demonetization, which affects 1.25 billion people, people die, people stand in queues, and yet it all stays largely peaceful. So I do not see people resisting if the authorities bring a good scheme. I almost forgot: as an added sweetener, the Chennai municipality said that if you do RWH and show photos and certificates of the job, you won't have to pay as much property tax as you otherwise would. That also boosted people's participation.

And that is not the only solution. One more has been outlined in Aaj Bhi Khade hain talaab, written by the recently deceased Gandhian environmental activist Anupam Mishra. His book can be downloaded for free at India Water Portal. Unfortunately, the book doesn't have a good English translation to date. Interestingly, all of his content is released into the public domain (CC-0), so people can continue to enjoy and learn from his life's work.

Another lesson could be taken from Israel, the father of modern micro-drip irrigation for crops. One of the things on my bucket list is to visit Israel and, if possible, learn how they went from a water-deficient country to a water-surplus one.

Which brings me to my second conundrum: most people believe that it's the Government's job to provide jobs to its people. India has been experiencing jobless growth for around a decade now, since the 2008 meltdown. While India was lucky to escape that, most of its trading partners weren't, hence international trade slowed down, which in turn slowed down the creation of new enterprises. Laws such as the Bankruptcy law and the upcoming Goods and Services Tax may help. Like everybody else, I am a bit excited and a bit apprehensive about how the actual implementation will take place.

Even international businesses have been found wanting. The latest example is Uber and Ola. There have been protests against the two cab/taxi aggregators operating in India. For the millions of jobless students coming out of schools and universities, there simply aren't enough jobs, nor are most (okay, 50%) of them qualified for the jobs. These 50 percent are also untrainable, so what to do?

In reality, this is what keeps me awake at night. India is sitting on a ticking time bomb. It is really a miracle that the youth have not rebelled yet.

While all the conditions, proposals and counter-proposals have been shared before, I wanted/needed to highlight them. While the issues seem to be local, I would assert that they are all glocal in nature. I'm sure that both developing and, to some extent, even developed countries are facing the same questions. I look forward to knowing what I can learn from them.

Update 23/02/17: I had wanted to share a bit about Debian's voting system, but that got derailed. Hence, rather than do that here, I'll just point to the 2015 platforms, where 3 people vied for the DPL post. I *think* I have shared about the DPL voting process earlier, but if not, I will do so in detail in some future blog post.

Filed under: Miscellenous

Tagged: #Anupam Mishra, #Bankruptcy law, #Chennai model, #clean air, #clean water, #elections, #GST, #immigrant, #immigrants, #Maratha, #Maratha Light Infantry, #migration, #national parties, #Political party manifesto, #regional parties, #ride-sharing, #water availability, Rain Water Harvesting

Before I start, I would like to point out

Before I start, I would like to point out  I asked why can t the city follow the Chennai model. Chennai, not so long ago was at the same place where Pune is, especially in relation to water. What happened next, in 2001 has been beautifully chronicled in

I asked why can t the city follow the Chennai model. Chennai, not so long ago was at the same place where Pune is, especially in relation to water. What happened next, in 2001 has been beautifully chronicled in  Which brings me to my second conundrum, most of the people believe that it s the Government s job to provide jobs to its people. India has been experiencing jobless

Which brings me to my second conundrum, most of the people believe that it s the Government s job to provide jobs to its people. India has been experiencing jobless  Even International businesses has been found wanting. The latest example has been Uber and Ola. There have been

Even International businesses has been found wanting. The latest example has been Uber and Ola. There have been  Welcome to gambaru.de. Here is my monthly report that covers what I have been doing for Debian. If you re interested in Android, Java, Games and LTS topics, this might be interesting for you.

Debian Android

Welcome to gambaru.de. Here is my monthly report that covers what I have been doing for Debian. If you re interested in Android, Java, Games and LTS topics, this might be interesting for you.

Debian Android

Whilst there is an in-depth report forthcoming, the

Whilst there is an in-depth report forthcoming, the  Previously:

Previously:

Here is my monthly update covering what I have been doing in the free software world (

Here is my monthly update covering what I have been doing in the free software world (

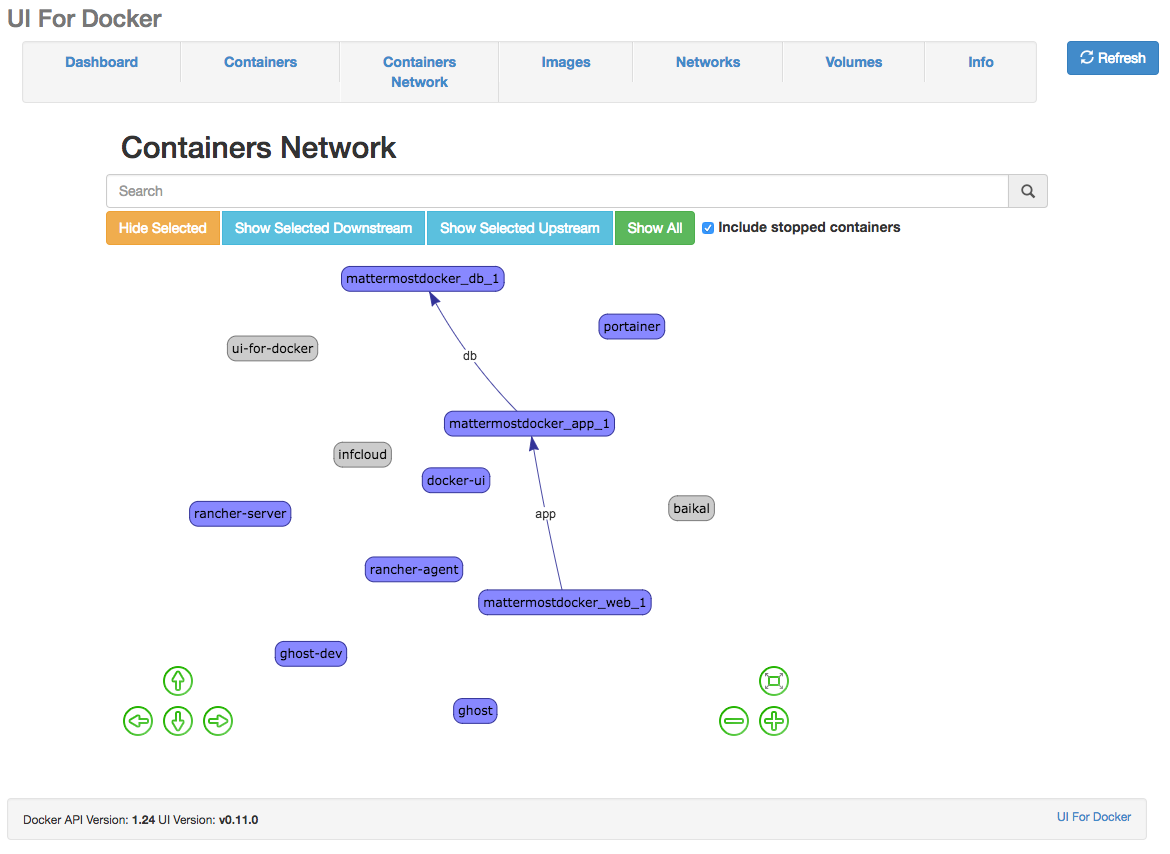

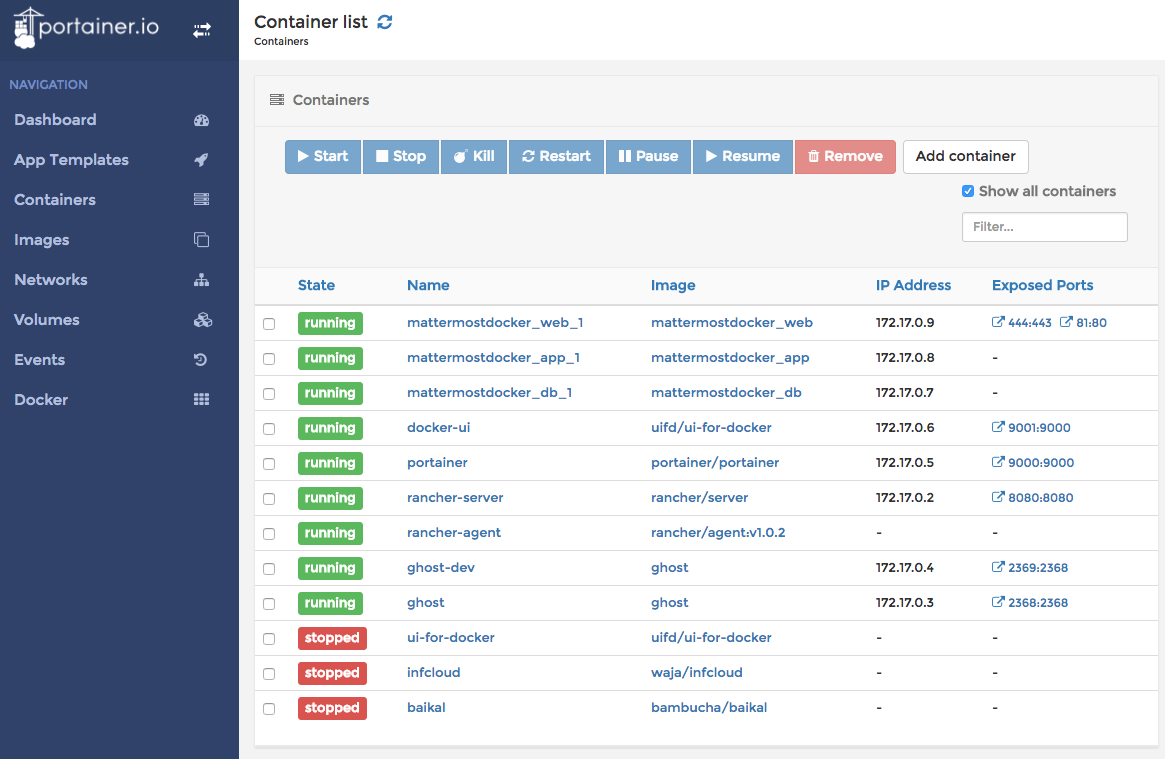

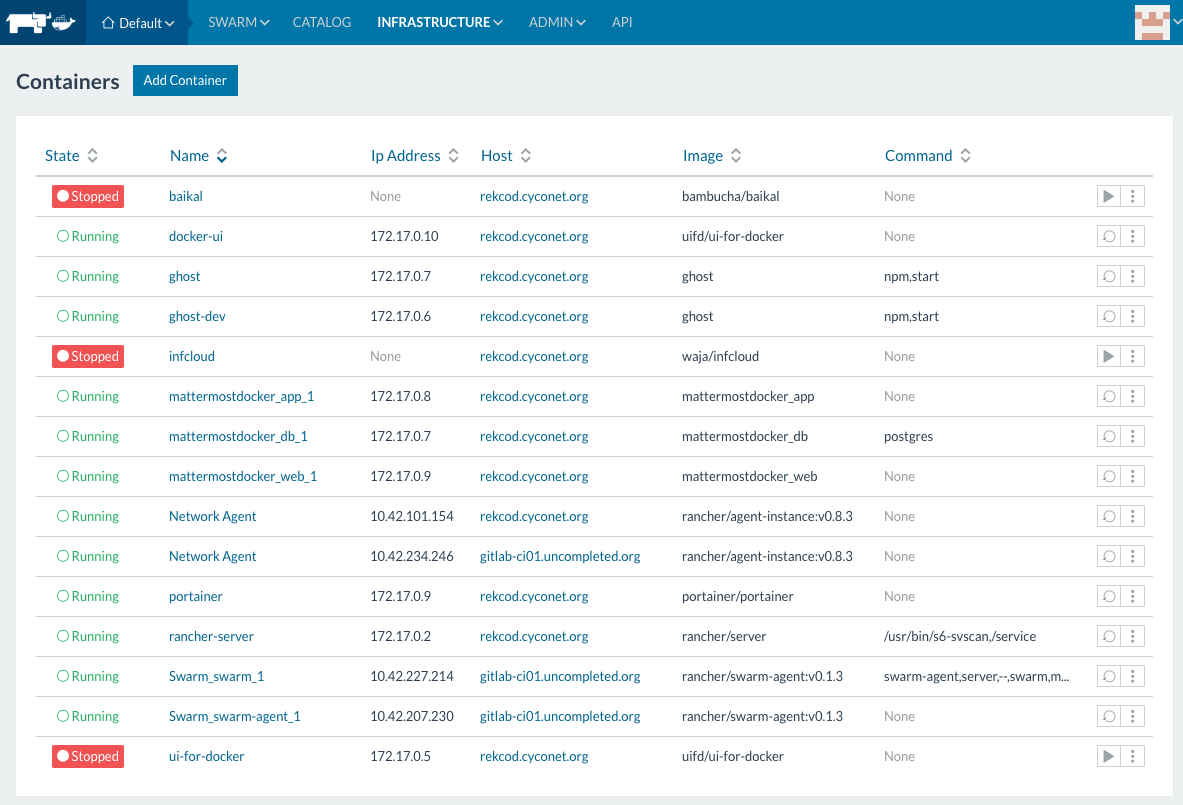

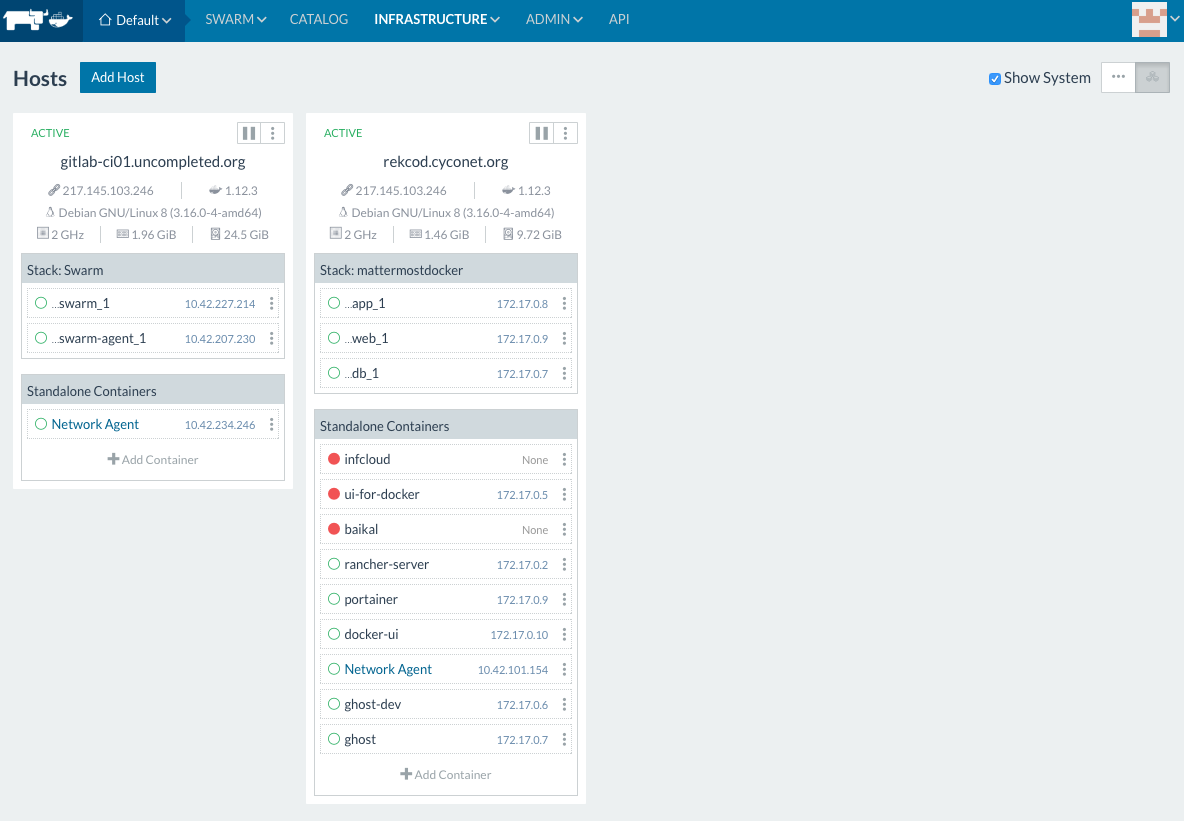

Since some time everybody (read developer) want to run his new

Since some time everybody (read developer) want to run his new

For the use cases, we are facing,

For the use cases, we are facing,

Jessie was released one year ago now and the Java Team has been busy preparing

the next release. Here is a quick summary of the current state of the Java packages:

Jessie was released one year ago now and the Java Team has been busy preparing

the next release. Here is a quick summary of the current state of the Java packages:

We're excited to announce that Debian has selected 29 interns to work with us

this summer: 4 in

We're excited to announce that Debian has selected 29 interns to work with us

this summer: 4 in